Artificial intelligence-generated deepfakes could be used to create fake political endorsements ahead of the General Election, or be used to sow broader confusion among voters, a study has warned.

Research by The Alan Turing Institute’s Centre for Emerging Technology and Security (Cetas) urged Ofcom and the Electoral Commission to address the use of AI to mislead the public, warning it was eroding trust in the integrity of elections.

The study said that while there was, so far, limited evidence that AI will directly impact election results, there were early signs of damage to the broader democratic system, particularly through deepfakes causing confusion, or AI being used to incite hate or spread disinformation online.

It said the Electoral Commission and Ofcom should create guidelines and request voluntary agreements for political parties setting out how they should use AI for campaigning, and require AI-generated election material to be clearly marked as such.

The research team warned that currently, there was “no clear guidance” on preventing AI being used to create misleading content around elections.

Some social media platforms have already begun labelling AI-generated material in response to concerns about deepfakes and misinformation, and in the wake of a number of incidents of AI being used to create or alter images, audio or video of senior politicians.

In its study, Cetas said it had created a timeline of how AI could be used in the run-up to an election, suggesting it could be used to undermine the reputation of candidates, falsely claim that they have withdrawn, or shape voter attitudes on a particular issue through disinformation.

The study also said misinformation around how, when or where to vote could be used to undermine the electoral process.

Sam Stockwell, research associate at the Alan Turing Institute and the study’s lead author, said: “With a general election just weeks away, political parties are already in the midst of a busy campaigning period.

“Right now, there is no clear guidance or expectations for preventing AI being used to create false or misleading electoral information.

“That’s why it’s so important for regulators to act quickly before it’s too late.”

Dr Alexander Babuta, director of Cetas, said: “While we shouldn’t overplay the idea that our elections are no longer secure, particularly as worldwide evidence demonstrates no clear evidence of a result being changed by AI, we nevertheless must use this moment to act and make our elections resilient to the threats we face.

“Regulators can do more to help the public distinguish fact from fiction and ensure voters don’t lose faith in the democratic process.”

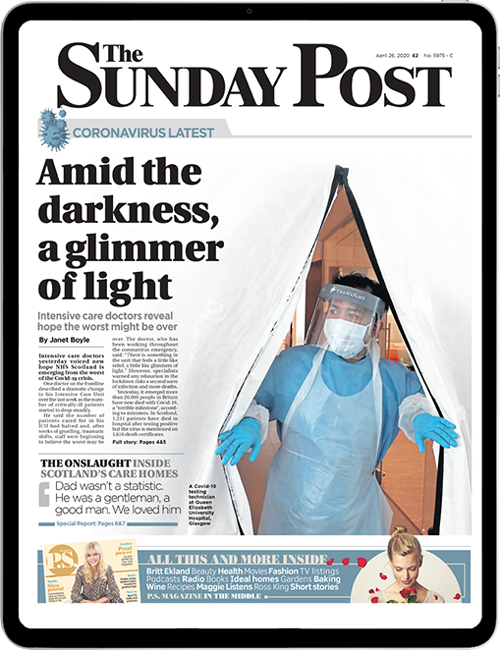
