US President Joe Biden’s administration is pushing the tech industry and financial institutions to shut down a growing market of abusive sexual images made with artificial intelligence technology.

New generative AI tools have made it easy to transform someone’s likeness into a sexually explicit AI deepfake and share those realistic images across chatrooms or social media. The victims — be they celebrities or children — have little recourse to stop it.

The White House wants voluntary co-operation from companies in the absence of federal legislation. By committing to a set of specific measures, officials hope the private sector can curb the creation, spread and monetisation of such nonconsensual AI images, including explicit images of children.

“As generative AI broke on the scene, everyone was speculating about where the first real harms would come. And I think we have the answer,” said Mr Biden’s chief science adviser Arati Prabhakar, director of the White House’s Office of Science and Technology Policy.

She described to The Associated Press a “phenomenal acceleration” of nonconsensual imagery fuelled by AI tools and largely targeting women and girls.

“If you’re a teenage girl, if you’re a gay kid, these are problems that people are experiencing right now,” she said.

“We’ve seen an acceleration because of generative AI that’s moving really fast. And the fastest thing that can happen is for companies to step up and take responsibility.”

A document shared with AP ahead of its Thursday release calls for action from not just AI developers but payment processors, financial institutions, cloud computing providers, search engines and the gatekeepers — namely Apple and Google — that control what makes it onto mobile app stores.

The private sector should step up to “disrupt the monetisation” of image-based sexual abuse, restricting payment access particularly to sites that advertise explicit images of minors, the administration said.

Ms Prabhakar said many payment platforms and financial institutions already say that they will not support the kinds of businesses promoting abusive imagery.

“But sometimes it’s not enforced; sometimes they don’t have those terms of service,” she said. “And so that’s an example of something that could be done much more rigorously.”

Cloud service providers and mobile app stores could also “curb web services and mobile applications that are marketed for the purpose of creating or altering sexual images without individuals’ consent,” the document says.

And whether an image is AI-generated or a real nude photo put on the internet, survivors should be able to get online platforms to remove it more easily.

The most widely known victim of pornographic deepfake images is Taylor Swift, whose ardent fanbase fought back in January when abusive AI-generated images of the singer-songwriter began circulating on social media. Microsoft promised to strengthen its safeguards after some of the Swift images were traced to its AI visual design tool.

A growing number of schools in the US and elsewhere are also grappling with AI-generated deepfake nudes depicting their students. In some cases, fellow teenagers were found to be creating AI-manipulated images and sharing them with classmates.

Last summer, the Biden administration brokered voluntary commitments by Amazon, Google, Meta, Microsoft and other major technology companies to place a range of safeguards on new AI systems before releasing them publicly.

That was followed by Mr Biden signing an ambitious executive order in October designed to steer how AI is developed so that companies can profit without putting public safety in jeopardy.

While focused on broader AI concerns, including national security, it nodded to the emerging problem of AI-generated child abuse imagery and finding better ways to detect it.

But Mr Biden also said the administration’s AI safeguards would need to be supported by legislation. A bipartisan group of US senators is now pushing Congress to spend at least 32 billion dollars (£25 billion) over the next three years to develop artificial intelligence and fund measures to safely guide it, though the group has largely put off calls to enact those safeguards into law.

Encouraging companies to step up and make voluntary commitments “doesn’t change the underlying need for Congress to take action here”, said Jennifer Klein, director of the White House Gender Policy Council.

Longstanding laws already criminalise making and possessing sexual images of children, even if they are fake. Federal prosecutors brought charges earlier this month against a Wisconsin man they said used a popular AI image-generator, Stable Diffusion, to make thousands of AI-generated realistic images of minors engaged in sexual conduct. A lawyer for the man declined to comment after his arraignment hearing Wednesday.

But there is almost no oversight over the tech tools and services that make it possible to create such images.

The Stanford Internet Observatory in December said it found thousands of images of suspected child sexual abuse in the giant AI database LAION, an index of online images and captions that’s been used to train leading AI image-makers such as Stable Diffusion.

London-based Stability AI, which owns the latest versions of Stable Diffusion, said this week that it “did not approve the release” of the earlier model reportedly used by the Wisconsin man. Such open-sourced models, because their technical components are released publicly on the internet, are hard to put back in the bottle.

Ms Prabhakar said it is not just open-source AI technology that is causing harm.

“It’s a broader problem,” she said. “Unfortunately, this is a category that a lot of people seem to be using image generators for. And it’s a place where we’ve just seen such an explosion. But I think it’s not neatly broken down into open source and proprietary systems.”

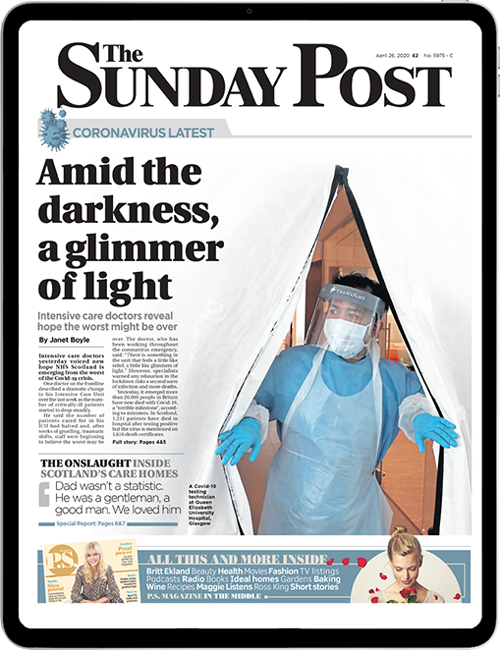

Enjoy the convenience of having The Sunday Post delivered as a digital ePaper straight to your smartphone, tablet or computer.

Subscribe for only £5.49 a month and enjoy all the benefits of the printed paper as a digital replica.

Subscribe