Google has said it is working to fix its new AI-powered image generation tool, after users claimed it was creating historically inaccurate images to over-correct long-standing racial bias problems within the technology.

Users of the Gemini generative AI chatbot have claimed that the app generated images showing a range of ethnicities and genders, even when doing so was historically inaccurate.

Several examples have been posted to social media, including cases where prompts to generate images of certain historical figures – such as the US founding fathers – returned images depicting women and people of colour.

We're aware that Gemini is offering inaccuracies in some historical image generation depictions. Here's our statement. pic.twitter.com/RfYXSgRyfz

— Google Communications (@Google_Comms) February 21, 2024

Google has acknowledged the issue, saying in a statement that Gemini’s AI image generation purposefully generates a wide range of people because the tool is used around the world and its output should reflect that, but it admitted the tool was “missing the mark here”.

“We’re working to improve these kinds of depictions immediately,” the company’s statement, posted to X, said.

“Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it. But it’s missing the mark here.”

Jack Krawczyk, senior director for Gemini experiences at Google, said in a post on X: “We are aware that Gemini is offering inaccuracies in some historical image generation depictions, and we are working to fix this immediately.

“As part of our AI principles, we design our image generation capabilities to reflect our global user base, and we take representation and bias seriously.

“We will continue to do this for open ended prompts (images of a person walking a dog are universal!).

“Historical contexts have more nuance to them and we will further tune to accommodate that.”

He added that it was part of the “alignment process” of rolling out AI technology, and thanked users for their feedback.

Some critics have labelled the tool “woke” in response to the incident, while others have suggested Google has over-corrected in an effort to avoid repeating previous incidents involving artificial intelligence, racial bias and diversity.

There have been several examples in recent years involving technology and bias, including facial recognition software struggling to recognise, or mislabelling, black faces, and voice recognition services failing to understand accented English.

The incident comes as debate around the safety and influence of AI continues, with industry experts and safety groups warning AI-generated disinformation campaigns will likely be deployed to disrupt elections throughout 2024, as well as to sow division between people online.

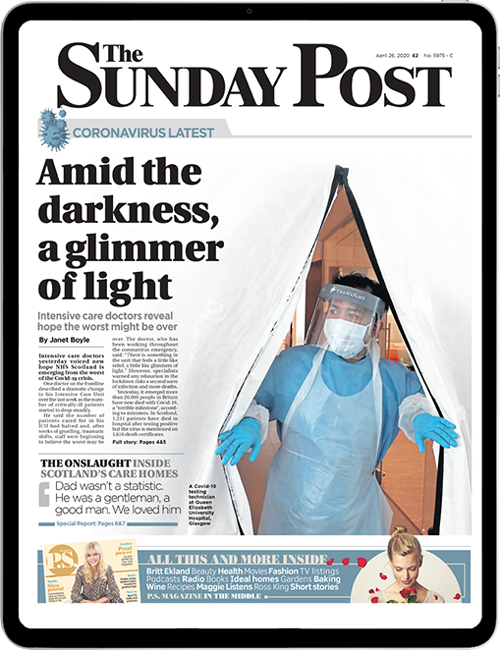
